I’m back with another edition of my infamous Gaming “Hot Takes!” I’ve officially given up on numbering these; I think this might be piece number four or five, but I’ve made several other posts over the last few years in which I share a few of my “hot takes” on gaming and the games industry in general. As I’ve said before, it’s never long before something in the world of gaming comes along to prompt another “hot take,” so that’s what we’re gonna look at today!

Video games are… well, they’re pretty darn good, to be honest with you. And I always like to make sure you know that I’m not some kind of “hater;” I like playing video games, and there are some titles that I genuinely believe eclipse films and TV shows in terms of their world-building, storytelling, or just pure entertainment value. We’re going to tackle some controversial topics today, though!

Before we take a look at the “hot takes,” I have a couple of important caveats. Firstly, I’m well aware that some or all of these points are the minority position, or at least contentious. That’s why they’re called “hot takes” and not “very obvious takes that everyone will surely agree with!” Secondly, this isn’t intended to be taken too seriously, so if I criticise a game or company you like, just bear that in mind. Finally, all of this is the entirely subjective, not objective, opinion of just one person.

Although gamers can be a cantankerous bunch, I still like to believe that there’s enough room – and enough maturity – in the wider community for respectful discussion and polite disagreement that doesn’t descend into name-calling and toxicity! So let’s all try to keep that in mind as we jump into the “hot takes,” eh?

“Hot Take” #1:

If your game is still in “early access,” you shouldn’t be allowed to sell DLC.

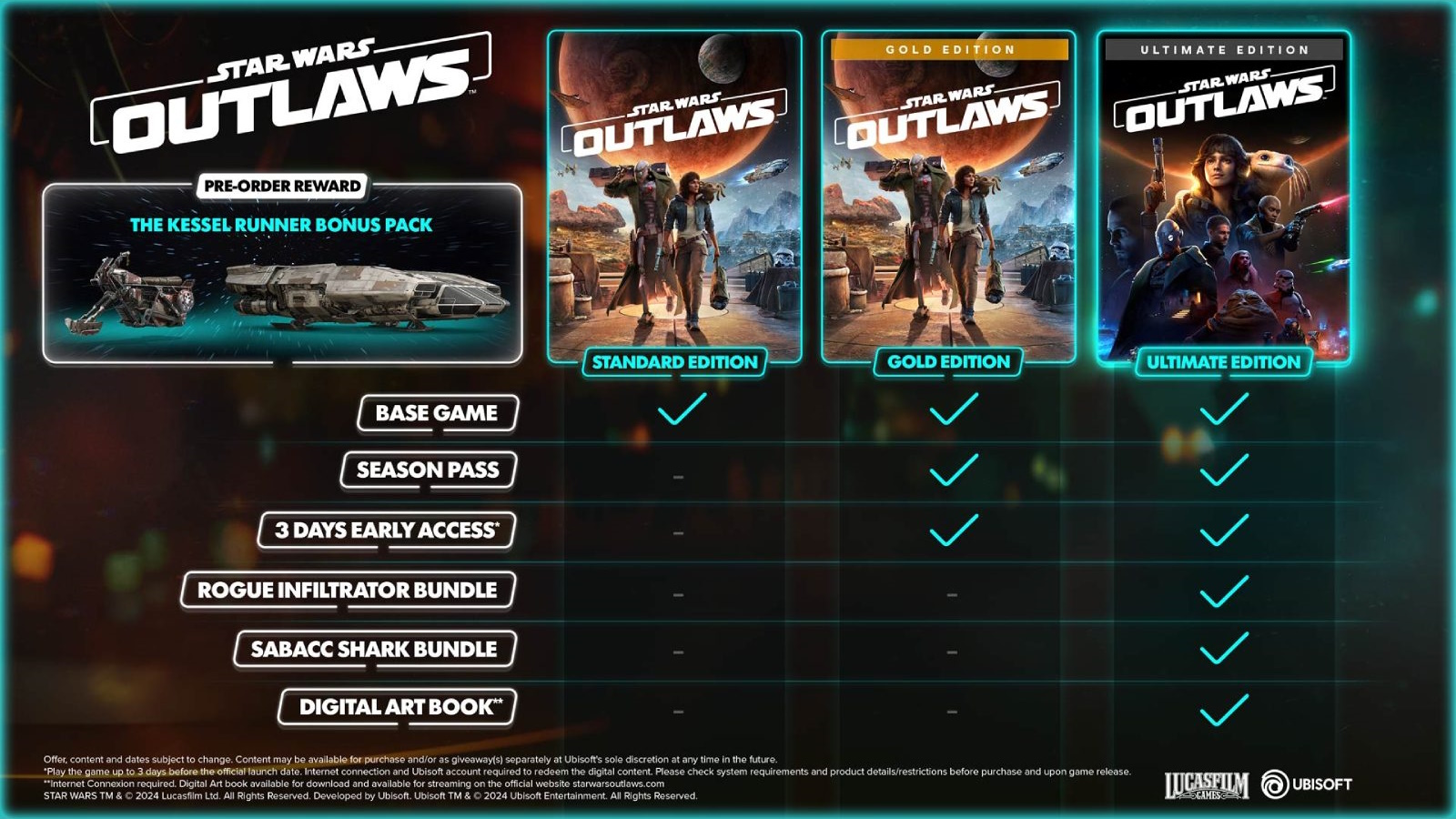

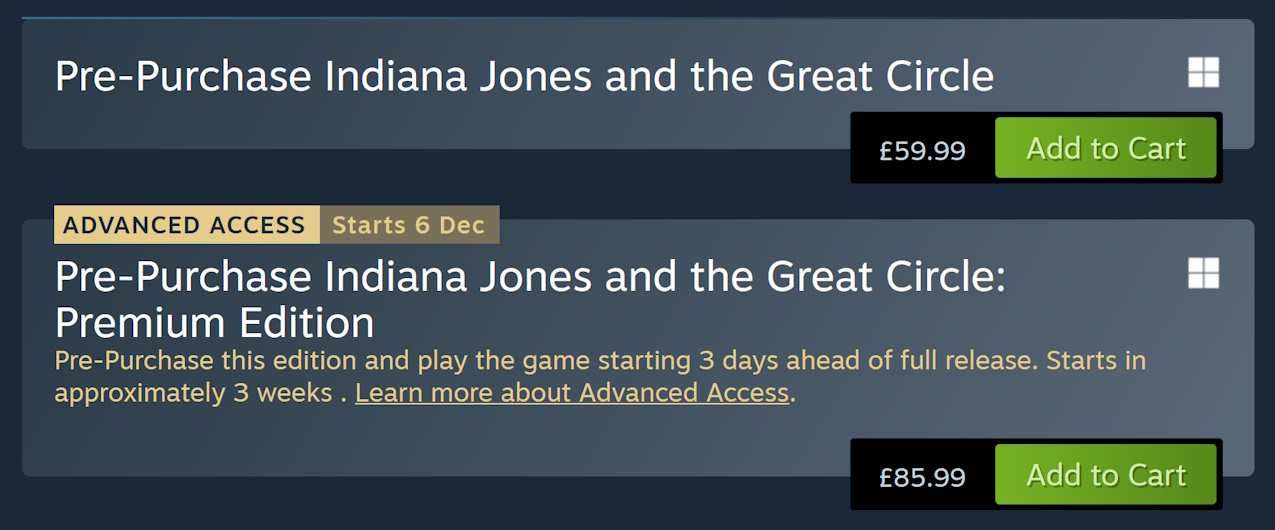

“Early access” means a game hasn’t been released yet, right? That’s what it’s supposed to mean, anyway – though some titles take the absolute piss by remaining in early access for a decade or more. But if you haven’t officially released your game, your focus ought to be on, y’know, finishing the game instead of working on DLC. Paid-for downloadable content for games that are still officially in “early access” is just awful.

Star Citizen is arguably the most egregious example of this. The game – from what I’ve seen – would barely qualify as an “alpha” version, yet reams of overpriced downloadable content are offered for sale. Some of it exists in-game, but a lot of it is really just a promise: an I.O.U. from the developers, promising to build a ridiculously expensive spaceship if and when time permits.

Early access has a place in gaming, and I don’t want to see it disappear. But that place is with smaller independent projects seeking feedback, not massive studios abusing the model. Selling DLC that doesn’t exist for a game that also doesn’t fully exist feels like a total piss-take, and given how often these things go horribly wrong, I’m surprised to see people still being lured in and falling for what can, at times, feel like a scam.

There have been some fantastic expansion packs going back decades, and I don’t object to DLC – even if it’s what I would usually call a pack of overpriced cosmetic items. But when the main game isn’t even out, and is supposedly still being worked on, I don’t think it’s unreasonable to say that charging money for DLC is wrong – these things should either be free updates or, if they’re definitely going to be sold separately, held in reserve until the game is launched.

“Hot Take” #2:

Bethesda Game Studios has basically made four good games… ever.

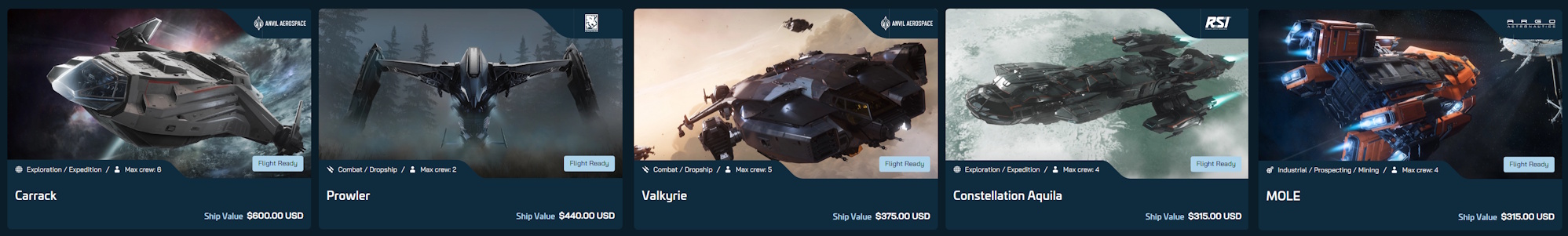

Morrowind, Oblivion, Fallout 3, and Skyrim. That’s it. That’s the list. From 2002 to 2011 – less than a decade – Bethesda Game Studios managed to develop and release four genuinely good games… but hasn’t reached that bar since. Bethesda has spent longer as a declining, outdated, and thoroughly mediocre developer than it ever did as a good developer. The studio is like the games industry equivalent of The Simpsons: fantastic in its prime, but what followed has been a long period of stagnation, decay, and mediocrity as they’ve been completely overtaken and eclipsed by competitors. To be blunt… I don’t see Starfield’s next (and probably last) expansion pack, or The Elder Scrolls VI, changing that.

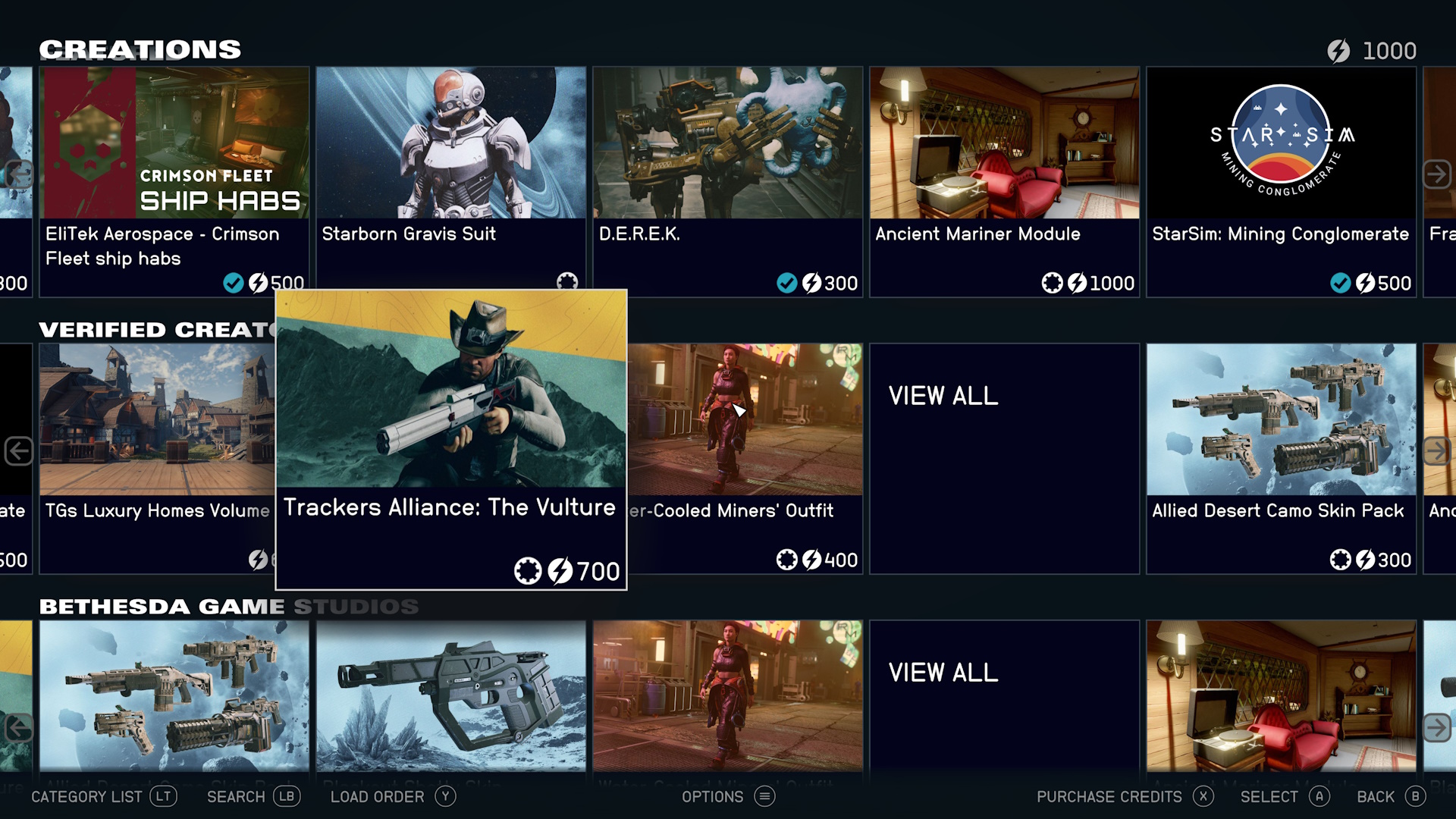

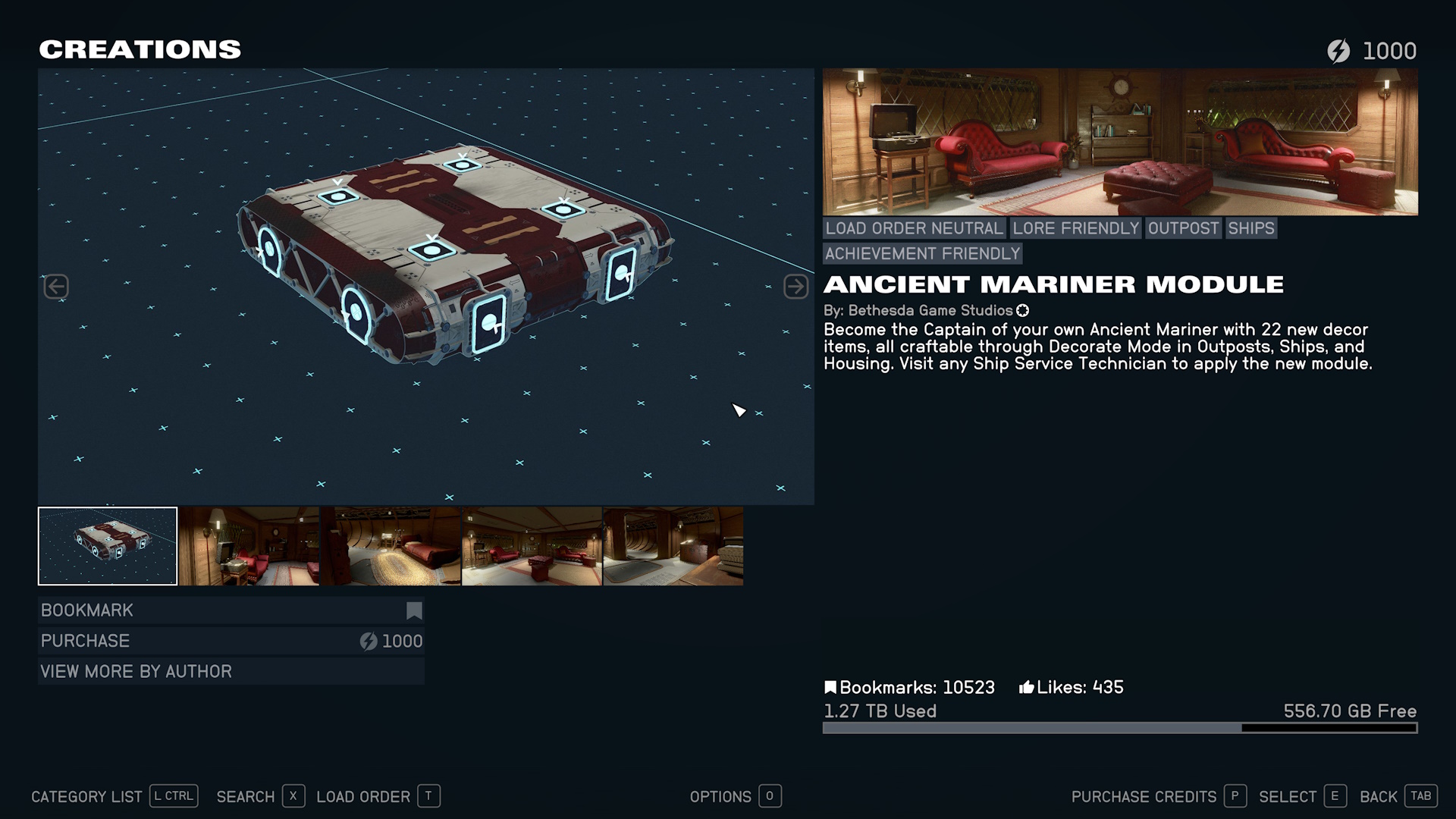

There is a retro charm to the likes of Arena and Daggerfall, and I won’t pretend that Fallout 4 didn’t have its moments. Even Starfield, with all of its limitations and issues, still had interesting elements, and the ship-builder was genuinely fun to use… at least at first. But since Skyrim in 2011, I would argue that Bethesda has been in decline. In fact, I believe Skyrim’s unprecedented success broke something fundamental in the way Bethesda’s executives and directors think about games. Gone was the idea of games as one-and-done things to be created and released. Replacing it was the concept I’ve called the “single-player live service,” where titles were transformed into “ten-year experiences” that could be monetised every step of the way.

As I said recently, I don’t have a lot of faith in The Elder Scrolls VI any more. It seems all but certain to contain another disgusting in-game marketplace for skins, items, and even entire questlines and factions. When there are so many other games to play that aren’t hideously over-monetised… why should I bother getting excited for The Elder Scrolls VI? Even worse, it’s being made in Bethesda’s “Creation Engine;” the zombified remains of software from thirty years ago that clearly isn’t up to the task and hasn’t been for a while.

Bethesda’s decline has been slow, and folks who skipped titles like Starfield and Fallout 76 might not be aware of just how bad things have gotten. Maybe I’m wrong, and maybe The Elder Scrolls VI will be a miraculous return to form. I hope so – I never want to root for a game to fail. But with so many other role-playing games out now or on the horizon… I just don’t see it measuring up as things stand. And in a way, I can’t help but feel it would be better in the long run if another studio were to take on the project.

“Hot Take” #3:

There won’t ever be another 1983-style “crash.”

Given the absolute state of modern gaming – at least insofar as many of the industry’s biggest corporations are concerned – I genuinely get where this feeling is coming from. But I think the people making this argument either don’t fully understand the 1983 crash, or don’t appreciate how massive gaming as a whole has become in the decades since then.

In short: in 1983, video games weren’t much more than pretty expensive digital toys. The home console market was relatively small, and like so many products over the years, it was genuinely possible that video games themselves could’ve been a flash in the pan; something comparable to LaserDisc, the hovercraft, or, to pick on a more modern example, Google Glass. All of these technologies threatened to change the world… but didn’t. They ended up being temporary fads that were quickly forgotten.

Photo: taylorhatmaker, CC BY 2.0 https://creativecommons.org/licenses/by/2.0, via Wikimedia Commons

Fast-forward to 2025. The games industry is massive. So many people play games in some form or another that the idea of a total market collapse or “crash” is beyond far-fetched. That isn’t to say there won’t be changes and shake-ups – whole companies could disappear, including brands that seem massive and unassailable right now. Overpriced games and hardware are going to be challenges, too. Changing technology – like generative A.I. – could also prove to be hugely disruptive, and there could be new hardware, virtual reality, and all sorts.

But a 1983-style crash? Gaming as a whole on the brink of disappearing altogether? It ain’t gonna happen! There is still innovation in the industry, though these days a lot of it is being driven by independent studios. Some of these companies, which are small outfits right now, could be the big corporations of tomorrow, and some of the biggest names in the industry today will almost certainly fall by the wayside. Just ask the likes of Interplay, Spectrum HoloByte, and Atari. But whatever may happen, there will still be games, there will still be big-budget games, and there will still be hardware to play those games on. Changes are coming, of that I have no doubt. But there won’t be another industry crash that comes close to what happened in ’83.

“Hot Take” #4:

Nintendo’s die-hard fans give the company way too much leniency and support – even for horribly anti-consumer shenanigans.

I consider myself a fan of Nintendo’s games… some of them, at least. I’ve owned every Nintendo console from the SNES to the first Switch, and unless something major comes along to dissuade me, I daresay I’ll eventually shell out for a Switch 2, too. But I’m not a Nintendo super-fan, buying every game without question… and some of those folks, in my opinion at least, are far too quick to defend the practices of a greedy corporation that doesn’t care about them in the slightest.

Nintendo isn’t much different from the likes of Ubisoft, Activision, Electronic Arts, Sony, Sega, and other massive publishers in terms of its business practices and its approach to the industry. But none of those companies have such a well-trained legion of die-hard apologists, ready to cover for them no matter how badly they screw up. Nintendo fans will happily leap to the defence of their favourite multi-billion dollar corporation for things they’d rightly criticise any other gaming company for. Price hikes, bad-value DLC, lawsuits against competitors or fans, underbaked and incomplete games… Nintendo is guilty of all of these things, yet if you bring up these points, at least in some corners of the internet, there are thousands of Nintendo fans piling on, shouting you down.

Obviously the recent launch of the Switch 2 has driven this point home for me. The console comes with a very high price tag, expensive add-ons, a paid-for title that should’ve been bundled with the system, an eShop full of low-quality shovelware, literally only one exclusive launch title, and over-inflated prices for its first-party games. But all of these points have been defended to the death by Nintendo’s super-fans; criticising even the shitty, overpriced non-entity Welcome Tour draws as much vitriol and hate as if you’d personally shat in their mother’s handbag.

Very few other corporations in the games industry enjoy this level of protection from a legion of well-trained – and pretty toxic – super-fans. And it’s just… odd. Nintendo has made its share of genuinely bad games. Nintendo has made plenty of poor decisions over the years. Nintendo prioritises profit over everything else, including its own fans and employees. Nintendo is overly litigious, suing everyone from competitors to its own fans. And Nintendo has taken actions that are damaging to players, families, and the industry as a whole. Gamers criticise other companies when they behave this way; Electronic Arts is routinely named as one of America’s “most-hated companies,” for instance. But Nintendo fans are content to give the corporation cover, even for its worst and most egregious sins. They seem to behave like fans of a sports team, insistent that “team red” can do no wrong. I just don’t understand it.

“Hot Take” #5:

“Woke” is not synonymous with “bad.”

(And many of the people crying about games being “woke” can’t even define the word.)

In some weird corners of social media, a game (or film or TV show) is decreed “woke” if a character happens to be LGBT+ or from a minority ethnic group. And if such a character is featured prominently in pre-release marketing material… that can be enough to start the hate and review-bombing before anyone has even picked up a control pad. The expression “go woke, go broke” does the rounds a lot… but there are many, many counter-examples that completely disprove this point.

Baldur’s Gate 3 is a game in which the player character can be any gender, and their gender is not defined by their genitals. Players can choose to engage in same-sex relationships, practically all of the companion NPCs are pansexual, and there are different races and ethnicities represented throughout the game world. But Baldur’s Gate 3 sold incredibly well, and will undoubtedly be remembered as one of the best games of the decade. So… is it “woke?” If so, why didn’t it “go broke?”

Many “anti-wokers” claim that they aren’t really mad about women in leading roles, minority ethnic characters, or LGBT+ representation, but about “bad writing.” And I will absolutely agree that there are some games out there that are genuinely poorly written, or which have stories I just did not care for in the least. The Last Of Us Part II is a great example of this – the game’s entire narrative was based on an attempt to be creative and subversive, but it hacked away at too many of the fundamentals of storytelling to be satisfying and enjoyable. But you know what wasn’t the problem with The Last Of Us Part II? The fact that one of its secondary characters was trans and another female character was muscular.

Good games can be “woke” and “woke” games can be good. “Woke” games can also be bad, either for totally unrelated reasons or, in some cases, because they got too preachy. But to dismiss a game out of hand – often without playing it or before it’s even launched – because some armchair critic on YouTube declared it to be “woke” is just silly. Not only that, but there are many games that contain themes, storylines, or characters that could be reasonably described as “woke” that seem to be completely overlooked by the very folks who claim it’s their mission to “end wokeness.” The so-called culture war is just a very odd thing, and it’s sad to see how it’s impacted gaming. I would never tell anyone they “must” play or not play certain games, but I think it’s a shame if people miss out on genuinely fun experiences because of a perception of some ill-defined political concept that, in most cases, doesn’t have much to do with the game at all.

So that’s it!

We’ve looked at a few more of my infamous “hot takes!” I hope it’s been a bit of fun… and not something to get too upset about! It’s totally okay to disagree, and one of the great things about gaming nowadays is that there’s plenty of choice. If you like a game that I don’t, or I enjoy a genre you find boring… that’s okay. If you’re a super-fan of something that I’m not interested in… we can still be friends. Even if we don’t agree politically, we ought to be able to have a civil and reasonable conversation without screaming, yelling, or name-calling!

Be sure to check out some of my other “hot takes.” I’ve linked a few other pieces below. And I daresay there’ll be more of these one day soon… I keep finding things in gaming to disagree with, for some reason. It must be because I’m getting grumpy in my old age; I’m just a big ol’ sourpuss!

Have fun out there, and happy gaming!

All titles mentioned above are the copyright of their respective publisher, developer, and/or studio. This article contains the thoughts and opinions of one person only and is not intended to cause any offence.

Links to other gaming “hot takes:”