A couple of years ago, I put together two lists of things I really dislike about modern video games – but somehow I’ve managed to find even more! Although there’s lots to enjoy when it comes to the hobby of gaming, there are still plenty of annoyances and dislikes that can detract from even the most pleasant of gaming experiences. So today, I thought it could be a bit of fun to take a look at ten of them!

Several of these points could (and perhaps one day will) be full articles or essays all on their own. Big corporations in the video games industry all too often try to get away with egregiously wrong and even malicious business practices – and we should all do our best to call out misbehaviour. While today’s list is somewhat tongue-in-cheek, there are major issues with the way big corporations in the gaming realm behave… as indeed there are with billion-dollar corporations in every other industry, too.

That being said, this is supposed to be a bit of fun. And as always, I like to caveat any piece like this by saying that everything we’re going to be talking about is nothing more than one person’s subjective take on the topic! If you disagree with everything I have to say, if you like, enjoy, or don’t care about these issues, or if I miss something that seems like an obvious inclusion to you, please keep in mind that all of this is just the opinion of one single person! There’s always room for differences of opinion; as gamers we all have different preferences and tolerance levels.

If you’d like to check out my earlier lists of gaming annoyances, you can find the first one by clicking or tapping here, and the follow-up by clicking or tapping here. In some ways, this list is “part three,” so if you like what you see, you might enjoy those older lists as well!

With all of that out of the way, let’s jump into the list – which is in no particular order.

Number 1:

Motion blur and film grain.

Whenever I boot up a new game, I jump straight into the options menu and disable both motion blur and film grain – settings that are almost always inexplicably enabled by default. Film grain is nothing more than a crappy Snapchat filter; something twelve-year-olds love to play with to make their photos look “retro.” It adds nothing to a game and actively detracts from the graphical fidelity of modern titles.

Motion blur is in the same category. Why would anyone want this motion-sickness-inducing setting enabled? It smears and smudges even the best-looking titles for basically no reason at all. On particularly underpowered systems these settings might hide some graphical jankiness, but on new consoles and even moderately good PCs, they’re unnecessary. They make games look significantly worse – and I can’t understand why anyone would choose to play a title with them enabled.

Number 2:

In-game currencies that have deliberately awkward exchange rates.

In-game currencies are already pretty shady: a psychological manipulation to trick players into spending more real money. But what’s far worse is when in-game currencies are deliberately awkward with their exchange rates. For example, most items on the storefront might cost 200 in-game dollars, but I can only buy in-game dollars in bundles of 250 or 500. If I buy 250 in-game dollars I’ll have 50 left over that I can’t spend, and if I buy 500 then I’ll have spent more than I need to.

This is something publishers do deliberately. They know that if you have 50 in-game dollars left over there’ll be a temptation to buy even more to make up the difference, and they know players will be forced to over-spend on currency that they have no need for. Some of these verge on being scams – but all of them are annoying.

Number 3:

Fully-priced games with microtransactions.

If a game is free – like Fortnite or Fall Guys – then microtransactions feel a lot more reasonable. Offering a game for free and funding it through in-game purchases is a viable business model, and while it needs to be monitored to make sure the in-game prices aren’t unreasonable, it can be an acceptable way for a game to make money. But if a game costs me £65 up-front, there’s no way it should include microtransactions.

We need to differentiate expansion packs from microtransactions, because DLC that massively expands a game and adds new missions and the like is usually acceptable. But if I’ve paid full price for a game, I shouldn’t find an in-game shop offering me new costumes, weapon upgrades, and things like that. Some titles absolutely take the piss with this, too, even including microtransactions in single-player campaigns, or having so many individual items for sale that the true cost of the game – including purchasing all in-game items – can run into four or even five figures.

Number 4:

Patches as big as (or bigger than) the actual game.

This one kills me because of my slow internet! And it’s come to the fore recently as a number of big releases have been buggy and broken at launch. Jedi: Survivor, for example, has had patches that were as big as the game’s original 120GB download size – meaning a single patch would take me more than a day to download. Surely it must be possible to patch or fix individual files without requiring players to download the entire game all over again – in some cases more than once.

I’m not a developer or technical expert, and I concede that I don’t know enough about this topic on a technical level to be able to say with certainty that it’s something that should never happen. But as a player, I know how damnably annoying it is to press “play” only to be told I need to wait hours and hours for a massive, unwieldy patch. Especially if that patch, when fully downloaded, doesn’t appear to have actually done anything!

Number 5:

Broken PC ports.

As I said when I took a longer look at this topic, I had hoped that broken PC ports were becoming a thing of the past. Not so, however! A number of recent releases – including massive AAA titles – have landed on PC in broken or even outright unplayable states, plagued by issues that are not present on PlayStation or Xbox.

PC is a massive platform, one that shouldn’t be neglected in this way. At the very least, publishers should have the decency to delay a PC port if it’s clearly lagging behind the console versions – but given the resources that many of the games industry’s biggest corporations have at their disposal, I don’t see why we should accept even that. Develop your game properly and don’t try to launch it before it’s ready! I’m not willing to pay for the “privilege” of doing the job of a QA tester.

Number 6:

Recent price hikes.

Inflation and a cost-of-living crisis are really punching all of us in the face right now – so the last thing we need is price hikes from massive corporations. Sony really pissed me off last year when they bragged to their investors about record profits before turning around literally a matter of weeks later and announcing that the price of PlayStation 5 consoles was going to go up. This is unprecedented, as the cost of consoles usually falls as a console generation progresses.

But Sony is far from the only culprit. Nintendo, Xbox, Activision Blizzard, Take-Two, Electronic Arts and practically every major corporation in the games industry have jacked up their prices over the last few years, raising the base price of a new game – and that’s before we look at DLC, special editions, and the like. These companies are making record-breaking profits, and yet they use the excuse of “inflation” to rip us off even more. Profiteering wankers.

Number 7:

The “release now, fix later” business model is still here.

I had hoped that some recent catastrophic game launches would have been the death knell for the “release now, fix later” business model – but alas. Cyberpunk 2077 failed so hard that it was pulled from the PlayStation Store and tanked CD Projekt’s share price… but even so, this appalling way of making and launching games has persisted. In the first half of 2023 alone, we’ve had titles like Hogwarts Legacy, Redfall, Jedi: Survivor, Forspoken, and The Lord of the Rings: Gollum that arrived broken, buggy, and unplayable.

With every disaster that causes trouble for a corporation, I cross my fingers and hope that lessons will be learned. But it seems as if the “release now, fix later” approach is here to stay. Or at least it will be as long as players keep putting up with it – and even defending it in some cases.

Number 8:

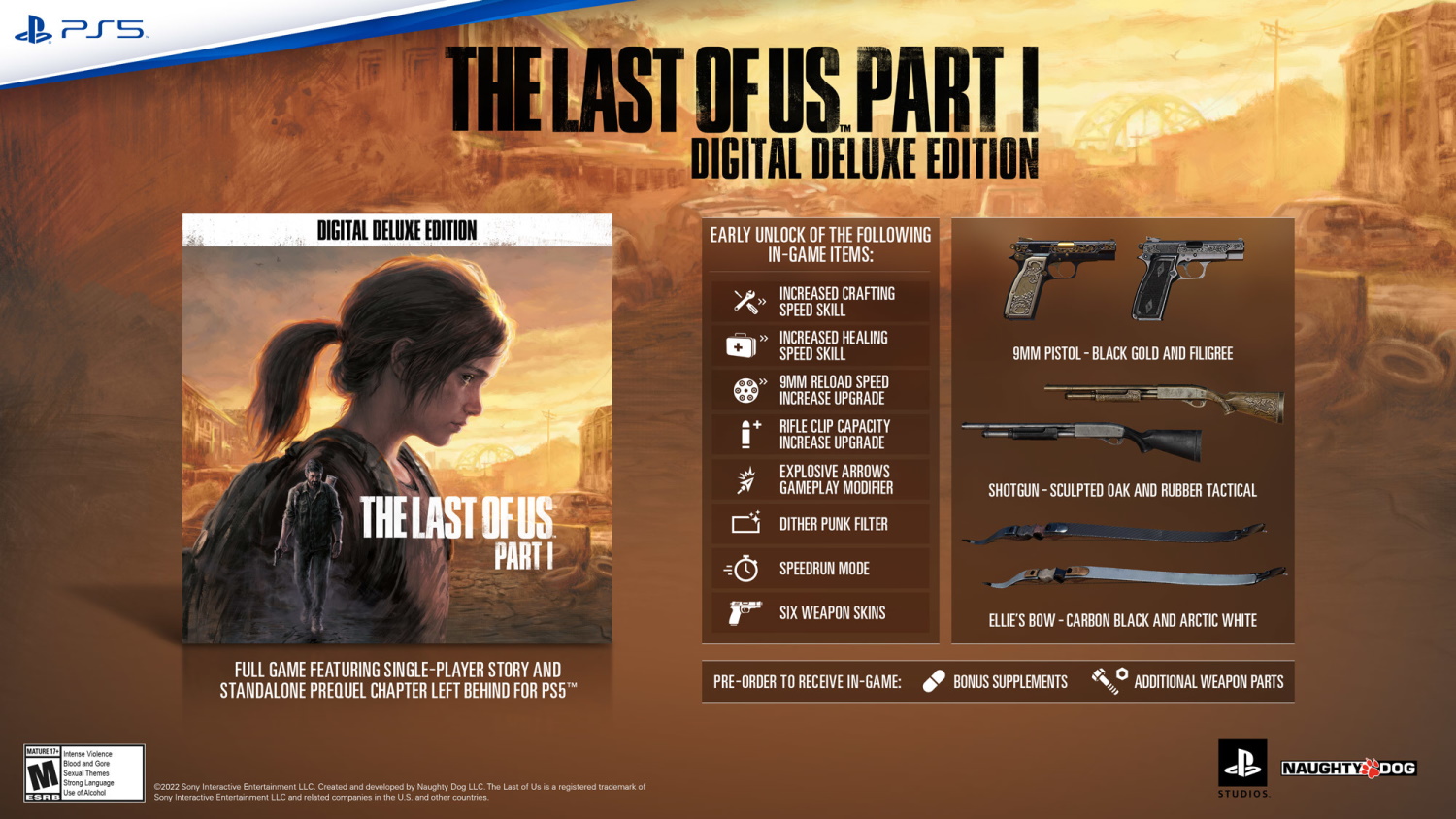

Day-one DLC/paywalled day-one content.

It irks me no end when content that was clearly developed at the same time as the “base version” of a game is paywalled off and sold separately for an additional fee. The most egregious example of this that comes to mind is Mass Effect 3’s From Ashes DLC, which was launched alongside the game. This DLC included a character and missions that were completely integrated into the game – yet had been carved out to be sold separately.

This practice continues, unfortunately, and many modern titles release with content paywalled off, even if that content was developed right along with the rest of the game. Sometimes these things are designed to be sold as part of a “special edition,” but that doesn’t excuse it either. Even if all we’re talking about are character skins and cosmetic content, it still feels like those things should be included in the price – especially in single-player titles. Some of this content can be massively overpriced, too, with packs of two or three character skins often retailing for £10 or more.

Number 9:

Platform-exclusive content and missions.

Some titles are released with content locked to a single platform. Hogwarts Legacy and Marvel’s Avengers are two examples that come to mind – and in both cases, missions and characters that should have been part of the main game were unavailable to players on PC and Xbox thanks to deals with Sony. While I can understand the incentive to do this… it’s a pretty shit way of making money for a publisher, and a pretty scummy way for a platform to try to attract sales.

Again, this leaves games incomplete, and players who’ve paid full price end up getting a worse experience or an experience with less to do depending on their platform of choice. That’s unfair – and it’s something that shouldn’t be happening.

Number 10:

Pre-orders.

“You know what you get for pre-ordering a game? A big dick in your mouth.”

Pre-ordering made sense – when games were sold in brick-and-mortar shops on cartridges or discs. You wanted to guarantee your copy of the latest big release, and one way to make sure you’d get the game before it sold out was to pre-order it. But that doesn’t apply any more; not only are more and more games being sold digitally, but even if you’re a console player who wants to get a game on disc, there isn’t the same danger of scarcity that there once was.

With so many games being released broken – or else failing to live up to expectations – pre-ordering in 2023 is nothing short of stupidity, and any player who still does it is an idiot. It actively harms the industry and other players by letting corporations get away with more misbehaviour and nonsense. If we could all be patient and wait a day or two for reviews, fewer games could be launched in unplayable states. Games companies bank on a significant number of players pre-ordering and not cancelling or refunding if things go wrong. It’s free money for them – and utterly unnecessary in an age of digital downloads.

So that’s it!

We’ve gone through ten of my pet peeves when it comes to gaming. I hope this was a bit of fun – and not something to get too upset over!

The gaming landscape has changed massively since I first started playing. Among the earliest titles I can remember trying my hand at is the Commodore 64 title International Soccer, and the first home console I was able to get was a Super Nintendo. Gaming has grown enormously since those days, and the kinds of games that can be created with modern technology, game engines, and artificial intelligence can be truly breathtaking.

But it isn’t all good, and we’ve talked about a few things today that I find irritating or annoying. The continued push from publishers to release games too early and promise patches and fixes is particularly disappointing, and too many publishers and corporations take their greed to unnecessary extremes. But that’s the way the games industry is… and as cathartic as it was to get it off my chest, I don’t see those things disappearing any time soon!

All titles mentioned above are the copyright of their respective developer, studio, and/or publisher. This article contains the thoughts and opinions of one person only and is not intended to cause any offence.