Spoiler Warning: Beware spoilers for the following games: Batman: Arkham Knight, Mass Effect 3, Halo Infinite, and The Last of Us Part II.

Microsoft’s latest round of layoffs has really hammered home how shaky some parts of the games industry feel as the 2020s roll along. Big games – like the remake of Perfect Dark, Rare’s long-awaited Everwild, and an unnamed title from ZeniMax Online – have all been canned as Microsoft “restructures” its gaming division… despite making literally more money than it ever has in its corporate existence. And all of this comes after some ridiculous studio closures barely twelve months ago. But the Xbox situation got me thinking… which other games and studios could be in danger?

So that rather depressing topic is what we’re going to tackle today. To be clear: I don’t think the entire games industry is heading for some kind of repeat of the 1983 “crash.” Gaming is so big nowadays, and there are so many people playing games, that the idea of gaming as a whole ever disappearing or experiencing that kind of huge slowdown just doesn’t seem feasible anymore. So to reiterate that last point: I am not predicting an industry-wide “crash.” But there are multiple publishers and developers that I believe are in danger – and one badly received game could, in some cases, lead to their exit from the industry altogether.

This piece was prompted by the Microsoft and Xbox news, but it’s not only Microsoft-owned studios that could be on the chopping block. There are issues at outfits owned by Sony, too, as well as third-party publishers and developers.

A few caveats before we go any further. Firstly, if you or someone you know works at one of these companies, please know that I don’t mean this as any kind of attack or slight against you or the quality of your work. This industry can be brutal, and as a commentator/critic, what I’m doing is sharing my view on the situation. What I’m categorically *not* doing is saying any of these companies “should” be shut down. I really don’t want to see more people in the industry put out of work. I spent a decade working in the games industry, and I worked for companies that went through tough times. I know what it’s like to feel like your job is on the line… and the last thing I want to do is rub salt in the wound or make things worse.

Secondly, I have no “insider information” from any of these developers or publishers. I’m looking in from the outside as someone who hasn’t worked in the industry for more than a decade at this point. Finally, all of this is the entirely subjective, not objective, opinion of just one person. If you disagree with my take, think I’ve got it wrong, or you’re just convinced that a company’s next game is sure to be an absolute banger… that’s totally okay. Gamers can be an argumentative lot sometimes, but I like to believe there’s enough room in the wider community for polite discussion and differences of opinion.

With all of that out of the way, let’s get started.

Endangered Studio #1:

Halo Studios

Halo Studios, formerly known as 343 Industries, is Microsoft’s in-house development team working on the Halo franchise. But… well, it wouldn’t be a stretch to say that 343/Halo Studios has never released a *big* hit. The closest they’ve come, in more than a decade, was remastering the original Halo games… and even then, we have to give the huge caveat of the bugs and performance issues that plagued early versions of the remasters.

Whether we look at Halo 4, Halo 5, Halo Infinite, the Halo Wars spin-off, or the mobile games… Halo Studios hasn’t exactly taken the gaming world by storm. Infinite was supposed to be the Xbox Series X’s “killer app;” a launch title to really sell people on the new console and make it a must-buy, just as the original Halo: Combat Evolved had done some twenty years earlier. That didn’t happen, and the reception to that game – including from yours truly – was pretty mixed.

Although Halo Studios has been hit by Microsoft’s layoffs in recent weeks, and a recent leak suggested that “no one at the studio is happy” with the state of their next title right now, I still think Xbox will give them another chance. The Halo series and Xbox are inseparable, at least in the minds of some players, and the name recognition and series reputation still count for something. But I don’t think those things will count indefinitely, so if the next Halo game isn’t a smash hit, Halo Studios will be in trouble.

This also comes after the failure of the Halo TV series. I happened to think the show was decent for what it was, but I understand where a lot of the criticism was coming from. That hasn’t helped Halo Studios’ case, though, and one of the best opportunities to grow the brand was squandered.

As a final note: every story has a natural end. I would suggest, perhaps, that Halo – or at least the Master Chief’s story – has pushed past that point. Recent narratives felt overly complicated, and I felt that Halo Studios was having to invent increasingly silly reasons for why the Master Chief was still fighting the Covenant and the Flood. Maybe the franchise just needs a break?

Endangered Studio #2:

Ubisoft

Ubisoft hasn’t been in great shape for quite some time. I think it’s fair to say that Ubisoft’s open world level design has stagnated, and a lot of players have kind of hit the wall when it comes to that style of game. But because the studio has doubled down on that formula and that way of making games… it might be hard to find a way back.

Ubisoft has slapped its open world style on franchises like Assassin’s Creed, Far Cry, Avatar, and even Star Wars… but many recent games have felt pretty repetitive; the same thing every time, just with a different coat of paint. I’m on the record saying that the open world formula doesn’t work for a lot of games, and although I don’t play a ton of Ubisoft titles… I think the repetitiveness of their games is a contributing factor, at least. Open worlds can be fun, but they can also be bloated and uninspired.

Earlier in 2025, a lot of folks seemed to be saying that Ubisoft’s financial situation basically meant that Assassin’s Creed: Shadows was the company’s “last chance.” I’m not sure I’d have gone that far myself; there are clearly other projects in the pipeline that at least have some potential. But Shadows seems to have been a modest success, at least, which has probably bought the company some time. A remake of the popular Assassin’s Creed: Black Flag could be a much-needed boost, too, if it succeeds at grabbing a new audience.

But in the longer term, Ubisoft needs to try new things. Its open world formula worked for a while, but repetitiveness and stagnation seem to have crept in. There are only so many open world “collect-a-thons” that anyone can be bothered to play, and if it feels like the same game is just being given a new skin every time… that’s not a lot of fun, in the end. Just Dance can’t keep the company afloat forever, so something’s gotta change, and soon.

I’m still crossing my fingers for that Splinter Cell remake, though!

Endangered Studio #3:

Nintendo

Bear with me on this. Nintendo is a titan of the games industry… but it’s also a more vulnerable company than folks realise. I don’t think people fully appreciate how big of a risk the Switch 2 has been with its high price, sole exclusive launch title, and repetitive design and branding. The console may have sold well in its first couple of weeks on sale – though, as I noted, it didn’t seem to have sold out everywhere – but that’s to be expected from a company with a well-trained legion of super-fans! The real question is still whether casual players, families, and people less connected to the gaming world will be willing to shell out for a console that’s now competing with the PlayStation 5 and Xbox Series X in terms of price.

I don’t know anyone – not one single person – who owned a Nintendo Switch as their sole gaming device. I’m sure some people did, but most folks I spoke to bought a Switch for one of three reasons: to play a handful of Nintendo exclusives, like Mario Kart 8 and Animal Crossing: New Horizons, to play some of their favourite games in a handheld format, or for their children to play some kid-friendly titles. The Switch was well-positioned for any of those use cases… the Switch 2, at its higher price point, is less so.

In 2013/14, when the Wii U was clearly faltering, Nintendo still had the 3DS to turn a profit and keep its corporate head above water. But now, the company is all-in on the Switch 2… meaning there’s less room for manoeuvre if things don’t go to plan. Because of Nintendo’s unique position in the industry, if its hardware falters it’s gonna be in big trouble, and the Switch 2 represents a departure from a successful business model. The Wii, the Switch, and Nintendo’s handhelds have all been well-positioned and well-priced to attract casual players… I’m not so sure the Switch 2 is. The company has some cash in reserve to keep going for a short while… but not indefinitely.

For those of you screaming that “it’ll never happen!!1!” I would remind you of Sega’s unceremonious exit from the console market just after the turn of the millennium. If you’d asked any gamer in the late ’90s what the future held for Sega, no one would’ve predicted that the Dreamcast’s failure would lead to the company shutting down its hardware division altogether. Nintendo is at the tippy-top of the games industry, and the Switch has been a phenomenally successful console. But its position is more precarious than people realise, and it would only take one console failure to throw the company into chaos. To be clear: I don’t necessarily think that Nintendo would just shut down and that would be that… but a Sega-style exit from the hardware market, and far fewer Nintendo games being produced, could happen. Never say never.

Endangered Studio #4:

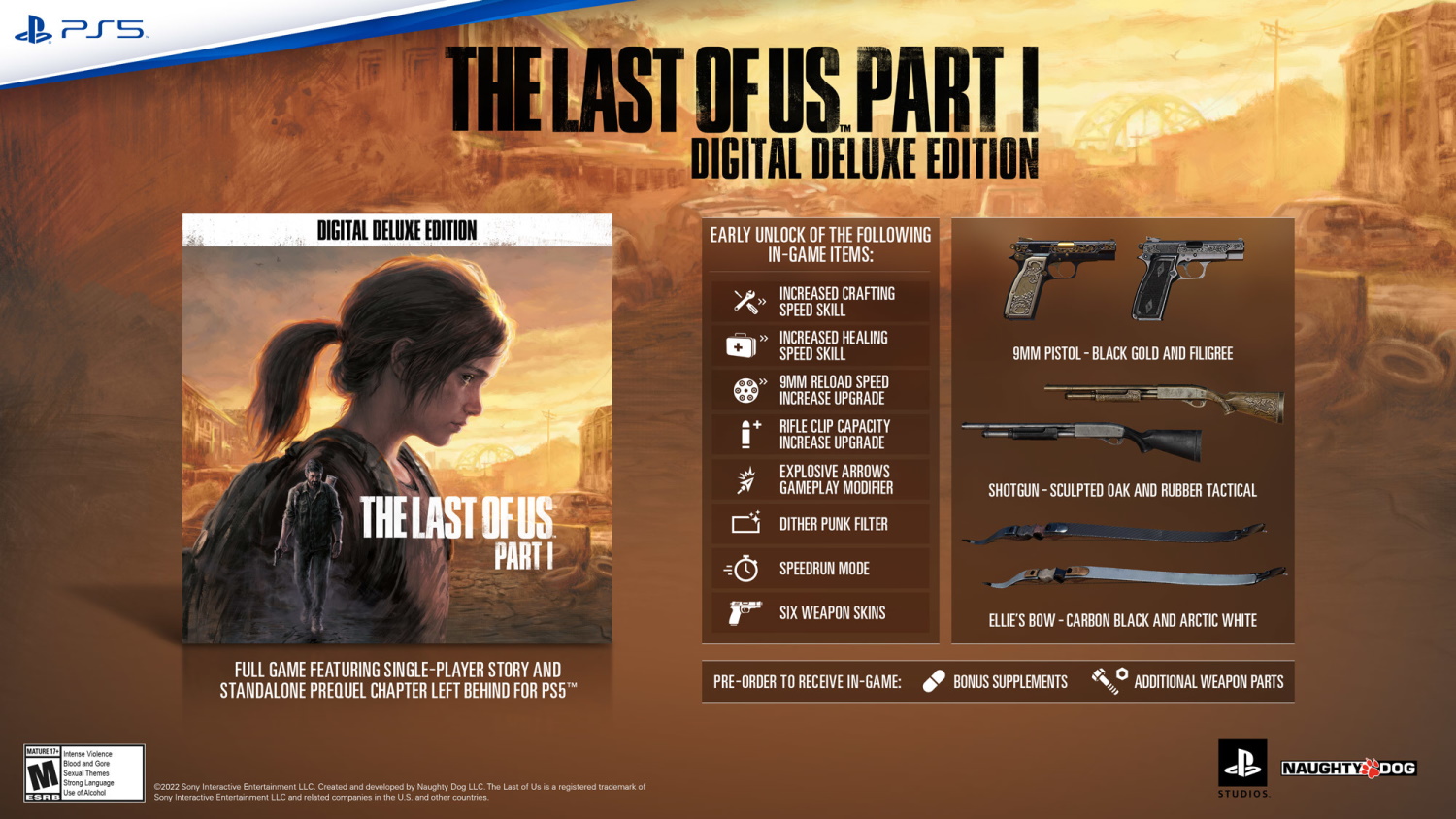

Naughty Dog

Naughty Dog developed Crash Bandicoot for the first PlayStation, the Jak and Daxter games, the Uncharted series, and The Last of Us. Although The Last of Us Part II proved controversial (I once said a 3/10 seemed like a fair score for that game), it seems to have sold pretty well, and the first title has been remastered… twice. But when Naughty Dog premiered a trailer for Intergalactic: The Heretic Prophet, the reception was less than glowing.

That game seems like it’s still a way off, too, and it might realistically launch as one of the final titles of the PlayStation 5 generation. But with the Uncharted series seemingly on the back burner, and after the controversy surrounding The Last of Us Part II… can the studio survive if Intergalactic underwhelms? I think there’s a very real possibility that Sony would be swift and brutal in that event.

It’s silly to pre-judge any title based on a single trailer that didn’t show so much as a frame of actual gameplay. Intergalactic: The Heretic Prophet might have a silly, clunky name… but we really don’t know much about its story or what it’ll feel like to play. Naughty Dog has pedigree (get it?) so I think there are reasons to be optimistic about their next game. But I can also see a world in which Intergalactic doesn’t succeed in the way Sony is surely demanding.

There are some upcoming games that are generating a ton of buzz and excitement. So far, Intergalactic isn’t amongst them. Maybe that will change as we get closer to the game’s launch and the marketing campaign kicks off. But maybe it’ll always be one of those games that just… didn’t do much for a lot of people. If that’s the case, Naughty Dog could be in trouble.

Endangered Studio #5:

Turn 10

Turn 10 are the folks behind Forza Motorsport. Or they were. As of July 2025, the Motorsport series seems to be going on hiatus, with Turn 10 suffering significant layoffs. The spin-off Forza Horizon series had been developed by another Microsoft subsidiary: Playground Games. But with Playground working on the new Fable title, it seems as if Turn 10 might be working on Forza Horizon 6 in the months ahead.

The Forza Horizon games are a ton of fun… but they’re also more arcadey, and the open world design isn’t Turn 10’s style. I can’t help but feel the studio only still exists after Forza Motorsport’s disappointment because Microsoft needs someone to take over the Horizon brief now that Playground Games is busy with Fable. After Forza Horizon 6 launches, if the main Motorsport brand is still on the back burner… what could Turn 10 realistically do?

If Xbox is going to persevere with its home consoles in the future – and I suspect that it will – then those consoles will need at least one proper racing game. Turn 10 had been providing that for the brand since 2005, back when the first Forza Motorsport launched on the original Xbox. There are third-party racing games, of course, and Microsoft has several on Game Pass, including rally titles, Formula 1 games, and more. But Forza should be a genuine competitor to Sony’s Gran Turismo series, and again, it should be giving players an incentive to consider picking up an Xbox console.

With Turn 10’s main series seemingly shut down, at least for the foreseeable future, and after having already suffered layoffs, I’m not sure where the studio finds a successful future. Maybe if Forza Horizon 6 knocks it out of the park… but even then, I could see Microsoft returning that series to Playground Games.

Endangered Studio #6:

Bethesda Game Studios

To be clear: we’re talking about Bethesda the developer, not all of the studios under Bethesda’s publishing umbrella. There are several factors here, so let’s go over all of them. Starfield was a disappointment and its DLC didn’t salvage the project. Fallout 4 and Fallout 76, despite achieving success in recent years, launched to controversy. The Elder Scrolls VI is still a ways off, which has pushed a potential Fallout 5 to the mid-2030s or beyond. Fallout 4 and Fallout 76 are thus the only Fallout titles that Microsoft can push to players enamoured with the Fallout TV series.

For me, this boils down to the success or failure of The Elder Scrolls VI. If that game truly lives up to the hype and reaches the high bar set by Skyrim, then Bethesda will be okay and will continue developing games for years to come. If it doesn’t, and it ends up closer in reputation and sales to Starfield… that could be it. Curtains. Microsoft will retain the studio’s various IP, but could conceivably distribute the ones that still have potential to other development teams. Speaking of which…

With the Fallout TV show proving to be a hit, it’s pretty clear that Microsoft is hankering for a new game. There have been all kinds of rumours, with a Fallout 3 remaster seemingly the only one that’s guaranteed at this stage. But could Microsoft tap one of its other developers to make another Fallout spin-off, or perhaps something like a New Vegas remaster? If that were to happen, and if that hypothetical game were to eclipse Bethesda’s entries in the long-running series, that could be another nail in Bethesda’s coffin. Bethesda only has two well-known franchises under its belt, so if one of those were taken away – even on an alleged “temporary” basis – that could be hugely symbolic.

Here’s my take: Bethesda made some great games in the 2000s, but has shown absolutely no ability to move with the times in the almost fifteen years since Skyrim. The studio’s leaders seem to have bought into their own hype, believing that every game they develop will automatically be as well-received as Skyrim… and can be heavily monetised without repercussions. There is still merit in the original Bethesda formula: an open-world game that turns players loose and opens up factions, questlines, and exploration. But other studios are doing similar things… and doing them way better. Bethesda feels like a bit of an outdated dinosaur, still clinging to Skyrim’s success more than a decade later. One more poorly received game could be the end of the line.

Endangered Studio #7:

Bungie

We talked about the Halo series a moment ago, but that franchise’s new developer isn’t the only one in trouble. The originators of the Halo franchise, Bungie, are in dire straits right now, and could be only a year or so away from closure. The Destiny games may have sold reasonably well, but I don’t think it’s unfair to say that the whole “live service” thing didn’t exactly go to plan for Bungie. Then came the development of Marathon… something I talked about a few weeks ago.

Marathon was in a world of trouble after a seriously underwhelming closed playtest left critics and fans feeling like the game needed a lot of work. Then came the news that Bungie had – not for the first time – plagiarised a whole bunch of art assets for the game without payment or credit to the artist. These pieces quite literally define Marathon’s “quirky” visual style… which was pretty much the only thing the game had going for it.

Sony acquired Bungie for what many have argued was an overly inflated price. A delay to Marathon has recently been announced, but any goodwill or positive buzz that the game could’ve had has entirely evaporated at this point. I’d argue that even a total overhaul won’t be enough; Marathon is pretty much dead on arrival, even after the delay. So… what happens to Bungie if that’s the case?

Sony can be just as brutal as everyone else when it comes to killing off underperforming studios. Just ask Firewalk, Pixelopus, Bigbig Studios, or London Studio. Bungie should not consider itself safe simply by virtue of its name or its high price tag… if Marathon fails, which it inevitably will, there are gonna be some tough questions asked by Sony. If Bungie can’t prove that they have something big lined up… that could be it.

Endangered Studio #8:

BioWare

Mass Effect: Andromeda. Anthem. Dragon Age: The Veilguard. BioWare has endured basically a decade of failures since the launch of Dragon Age: Inquisition, and it’s difficult to see Electronic Arts being willing to put up with another title that doesn’t live up to expectations. And I’m afraid there are serious questions about the studio’s next project: a sequel to the beloved Mass Effect trilogy.

I have a longer piece in the pipeline that I’ve been working on for a while about the importance of endings – and how, in the modern entertainment industry, very few stories are allowed to come to a dignified, natural end. The Mass Effect trilogy, with its buildup to the defeat of the Reapers, is an example of that… and it’s hard to see how telling another story in that universe won’t feel tacked-on, repetitive, or underwhelming in comparison to what’s come before. That was a big part of the Andromeda problem, in my opinion: after literally saving the galaxy, there’s basically nowhere for Mass Effect to go.

I don’t buy the criticisms of Dragon Age: The Veilguard failing because it was “too woke.” I think a lot of armchair critics seized on a single line from one character and tried to make the game all about that. But there were clearly issues with The Veilguard, not least its stop-start development, multiple changes in focus, and deviation from the art style of the earlier games. I hope BioWare has learned something from that experience… but, to be blunt, they should’ve learned those lessons already from Andromeda and Anthem.

I will almost certainly play Mass Effect 4. So BioWare can take comfort in the fact that they have at least one guaranteed sale right here! But… am I optimistic? I’m curious, sure, and I want the game to be good. But I also can’t shake the feeling that it’s going to be a story that’s just going to struggle to make the case for itself. Why, after Shepard beat the Reapers, do I need to see this new story? What’s going to be the hook? And without that… will it be worth playing? This is surely BioWare’s absolutely final chance, and with EA notorious for shutting down underperforming studios, everything is now riding on Mass Effect.

Endangered Studio #9:

Firaxis Games

Like BioWare above, Firaxis is on a bit of a weak run right now. XCOM: Chimera Squad underperformed on PC, leading to its console port being cancelled. And Marvel’s Midnight Suns was also considered a disappointment by parent company Take-Two Interactive. Then we come to this year’s Civilization VII, which is underperforming right now: players seemingly prefer to stick with Civ VI or even Civ V, and there’s criticism of various aspects of the game – not least its three-era structure.

I believe Civilization VII has potential, but there’s clearly a limited window of time to really showcase that potential before panic sets in. At time of writing, there have only been a couple of significant updates to the base game, which launched almost six months ago. Players are still calling on Firaxis to patch bugs, rebalance key features, and add more to the game… and many of those players seem to have drifted back to Civ VI while they wait.

Other “digital board games” inspired by the venerable Civilization series have been eating Firaxis’ lunch, too. They don’t have the genre all to themselves any more, and I think we’re seeing the limitations of releasing a partial game, then hoping to sell expensive DLC to patch the holes. Civ VI did that, too, but there was arguably a stronger foundation to build upon and a fun base game to get players interested in the DLC in the first place.

I suspect Firaxis will get another chance. Even if work on Civilization VII were to end sooner than expected, 2K still recognises the strength of the series and the value of its name. But if a hypothetical Civ VIII or some other sequel or spin-off were to flop, too? That’s when Firaxis could be in real trouble.

Endangered Studio #10:

Rocksteady Studios

No, not Grand Theft Auto developers Rockstar, we’re talking about Rocksteady – the team behind the Batman: Arkham series and last year’s critically panned Suicide Squad: Kill the Justice League. In 2015, Arkham Knight suffered horribly with a ridiculously poor PC port, but the Arkham series has been otherwise popular and well-received, especially by Batman fans. But in 2024, Suicide Squad: Kill the Justice League was not, and left many players wondering how such a bad game could’ve taken Rocksteady such a long time to craft.

The bottom line is this: Kill the Justice League has lost parent company Warner Bros. Games more than $200 million. That’s… well, that’s not exactly great news when you’re trying to keep the lights on! These live service types of games are notorious for being expensive flops in a lot of cases, and what often follows an expensive, poorly-reviewed title is a studio closure.

There are rumours that Rocksteady has already been laying off staff, first in the QA department, and later in other technical fields, too. The studio also has no new game on its schedule at time of writing; it seems some staff are still working on Kill the Justice League in supporting roles, while others may be working to assist Portkey Games with a new version of Hogwarts Legacy. Again, that doesn’t bode well for the studio.

Practically all of the studios we’ve talked about today were once well-regarded and had at least some popular and successful titles in their back catalogues. But with the Arkham series having wrapped up a decade ago, I don’t think its lingering goodwill will be enough to save Rocksteady. Kill the Justice League was a game outside of the studio’s area of expertise, seemingly forced on them by Warner Bros. Games, and it sucks that they couldn’t stick to making the kinds of single-player titles at which they excelled.

So that’s it.

We’ve talked about a few developers and publishers that *could* be in danger in the months and years ahead.

As I said at the beginning: I’m never rooting for anyone to fail. Well, except really low-quality shovelware or games with abusive gambling baked in! But those obvious exceptions aside, I don’t want to see games fail or studios closed down, and I especially don’t want to see hard-working developers and other industry insiders losing their jobs. There’s more than enough of that going around without adding to it.

But as a critic and commentator who talks about gaming, I wanted to share my opinion on these studios in light of what’s been going on in the games industry. There are plenty of examples of high-profile failures, collapses, and shutdowns. Whether we’re talking about Atari, Interplay, most of Maxis, Sega, THQ, Lionhead, Acclaim, or Neversoft, one thing is clear: being a well-known brand with a good reputation isn’t enough. The games industry is cutthroat, and not all companies – not even those that seem to have scaled the heights and reached the very top of the gaming realm – can be considered safe.

Photo: taylorhatmaker, CC BY 2.0 https://creativecommons.org/licenses/by/2.0, via Wikimedia Commons

Maybe I’m wrong about some or all of these companies – and in a way, I hope that I am. But at the same time, gaming is like any other industry and it needs innovation. If the same companies dominate the gaming landscape forever, things will quickly stagnate. What gives me hope is that there are plenty of smaller studios producing new and innovative titles, and some of them will go on to be the “big beasts” of tomorrow.

So I hope this has been… well, not “fun,” but interesting, at any rate. And please check back here on Trekking with Dennis, because there’s more gaming content and coverage to come!

All titles discussed above are the copyright of their respective developer, studio, and/or publisher. Some screenshots and promotional artwork courtesy of IGDB. This article contains the thoughts and opinions of one person only and is not intended to cause any offence.